Parallel Image Filtering

Role Overview

Project Time: November 2022 - December 2022

Role: Developer

Language: Python

Dependencies: PIL (Pillow)

I worked closely with a partner as a developer to create this application for a school project. What sets it apart from a standard image filtering program is that it employs parallelism techniques, which enable it to process more images in less time.

Concept

Although adjusting an image may seem as simple as adding numbers to its current values, a lot more is going on: thousands of pixels are being manipulated. This can take quite some time, especially for large images and many queries, and this is where multiprocessing and threading come into play. A conventional program executes its instructions sequentially, but parallel programming techniques allow the machine to run multiple instructions simultaneously, which can lead to substantial performance gains.
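
To make the per-pixel work concrete, here is a minimal sketch of a brightness adjustment on an 8-bit grayscale image stored as a flat list of values. `adjust_brightness` is a hypothetical helper, not part of the project. Pillow's `ImageEnhance.Brightness` applies a conceptually similar scaling in C, but the amount of work still grows with the pixel count: a single 1920×1080 image already has 2,073,600 pixels.

```python
def adjust_brightness(pixels, factor):
    """Scale every 8-bit grayscale value by `factor`, clipping to 0..255.

    Hypothetical illustration: one multiplication and one clip per pixel,
    so the cost is proportional to the number of pixels.
    """
    return [min(255, max(0, round(value * factor))) for value in pixels]


print(adjust_brightness([0, 100, 200], 1.5))
```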

This project focuses on designing and implementing an Image Enhancement program using parallel programming to improve performance and efficiency. The program takes the following input from the user:

- Folder location of images

- Folder location of enhanced images

- Enhancing time in minutes

- Brightness enhancement factor

- Sharpness enhancement factor

- Contrast enhancement factor

Once the input has been parsed, the program enhances the images according to the given enhancement factors and writes them to the output folder for enhanced images. A text file containing summary statistics is also generated for further analysis.
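
A minimal sketch of collecting these six inputs, assuming a command-line interface (the original program may prompt for them interactively instead). The flag names, defaults, and the `EnhancerConfig` and `parse_config` names are hypothetical.

```python
import argparse
from dataclasses import dataclass


@dataclass(frozen=True)
class EnhancerConfig:
    """User input for one run (hypothetical field names)."""
    input_dir: str
    output_dir: str
    minutes: float
    brightness: float
    sharpness: float
    contrast: float


def parse_config(argv=None):
    # Hypothetical CLI mirroring the six inputs listed above.
    parser = argparse.ArgumentParser(description="Parallel image enhancer (sketch)")
    parser.add_argument("input_dir", help="folder containing the source images")
    parser.add_argument("output_dir", help="folder for the enhanced images")
    parser.add_argument("--minutes", type=float, default=1.0,
                        help="how long the enhancer is allowed to run")
    parser.add_argument("--brightness", type=float, default=1.0)
    parser.add_argument("--sharpness", type=float, default=1.0)
    parser.add_argument("--contrast", type=float, default=1.0)
    args = parser.parse_args(argv)
    # A factor of 1.0 leaves the image unchanged in Pillow's ImageEnhance classes.
    return EnhancerConfig(args.input_dir, args.output_dir, args.minutes,
                          args.brightness, args.sharpness, args.contrast)
```

For example, `parse_config(["images", "enhanced", "--minutes", "0.5", "--brightness", "1.2"])` returns a config with the remaining factors left at 1.0.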

Task Decomposition, Task Assignment, and Agglomeration

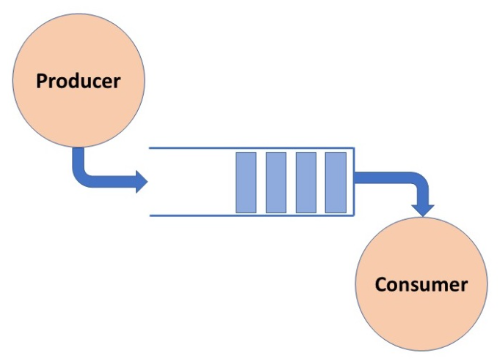

The implementation is heavily based on the producer-consumer problem, as illustrated below. The program has two main tasks, each assigned to a child process: (1) retrieving the input, and (2) processing the images. The producer retrieves the images from the input folder and parses each one in preparation for processing, then appends the parsed images to a shared buffer. The consumer takes each image from that buffer, processes it, and writes the result to the output folder. In essence, both processes handle the same data, but each performs a different task on it.

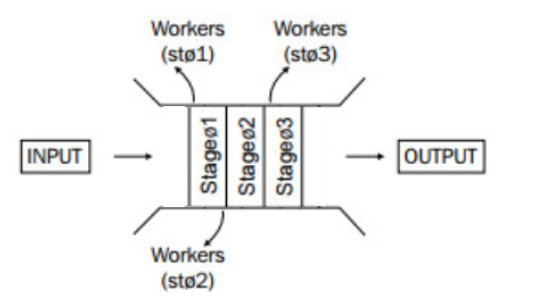
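
A minimal sketch of this producer-consumer structure with one producer and one consumer process. It uses `multiprocessing.Queue` as the shared buffer, a swapped-in primitive chosen for brevity (the project itself used a manager object and a semaphore). The "parsing" and "processing" steps are string stand-ins, and all function names are hypothetical.

```python
import multiprocessing as mp

SENTINEL = None  # placed in the buffer to signal "no more images"


def producer(paths, buffer):
    """Retrieve each input, 'parse' it, and append it to the shared buffer."""
    for path in paths:
        parsed = path.strip().lower()   # stand-in for opening and converting an image
        buffer.put(parsed)
    buffer.put(SENTINEL)


def consumer(buffer, results):
    """Process items from the buffer until the sentinel arrives."""
    while True:
        item = buffer.get()
        if item is SENTINEL:
            break
        results.put(f"enhanced:{item}")  # stand-in for filtering and saving


def run_pipeline(paths):
    buffer, results = mp.Queue(), mp.Queue()
    workers = [
        mp.Process(target=producer, args=(paths, buffer)),
        mp.Process(target=consumer, args=(buffer, results)),
    ]
    for w in workers:
        w.start()
    # Drain the results before joining so no process blocks on a full pipe.
    enhanced = [results.get() for _ in paths]
    for w in workers:
        w.join()
    return enhanced


if __name__ == "__main__":
    print(run_pipeline([" A.jpg", "b.PNG"]))
```

The sentinel lets the consumer stop cleanly without polling, and the `__main__` guard is required on platforms that start child processes with the "spawn" method.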

The number of tasks grows as the problem size increases. Ideally, each task would get its own worker process to improve performance. However, interprocess communication adds overhead and can slow the program down, so it is important to group smaller tasks together to increase the locality of the algorithm and minimize the risk of a performance decline. Thus, the job of the consumer process is further divided into three (3) subtasks:

- Stage 1: Application of Brightness Filter: enhances the image according to the input brightness enhancement factor

- Stage 2: Application of Contrast Filter: enhances the image according to the input contrast enhancement factor

- Stage 3: Application of Sharpness Filter: enhances the image according to the input sharpness enhancement factor
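
A minimal sketch of the three stages using Pillow's `ImageEnhance` module, assuming they are applied in the order listed; `enhance_image` is a hypothetical name. In each `ImageEnhance` class, `enhance(1.0)` returns the original image, so a factor of 1.0 effectively skips that stage.

```python
from PIL import Image, ImageEnhance


def enhance_image(image, brightness, contrast, sharpness):
    """Apply the brightness, contrast, and sharpness stages in sequence."""
    image = ImageEnhance.Brightness(image).enhance(brightness)  # Stage 1
    image = ImageEnhance.Contrast(image).enhance(contrast)      # Stage 2
    image = ImageEnhance.Sharpness(image).enhance(sharpness)    # Stage 3
    return image


if __name__ == "__main__":
    gray = Image.new("RGB", (4, 4), (100, 100, 100))
    print(enhance_image(gray, 2.0, 1.0, 1.0).getpixel((1, 1)))
```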

As previously discussed, the producer parses each image it retrieves and transforms it into a format usable for processing. The consumer can therefore assume that it receives clean input, which removes the need for a separate cleaning subtask.
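
One plausible "usable format" is sketched below, assuming the producer converts every file to RGB (PNGs may be RGBA or palette-based) and ships a plain `(mode, size, bytes)` record through the buffer, which the consumer turns back into an image. `parse_image` and `rebuild_image` are hypothetical helpers, not the project's code.

```python
from PIL import Image


def parse_image(path):
    """Open an image file and return a picklable (mode, size, bytes) record."""
    with Image.open(path) as img:
        rgb = img.convert("RGB")        # normalize the mode for every input format
    return rgb.mode, rgb.size, rgb.tobytes()


def rebuild_image(record):
    """Inverse of parse_image, used on the consumer side."""
    mode, size, data = record
    return Image.frombytes(mode, size, data)
```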

Here, the input is an item retrieved from the shared buffer. Within the consumer process, each stage is assigned a separate worker thread to divide the work of manipulating the image. Once every worker thread has finished its task, the threads are joined back into the consumer process and the image is written to the output folder for processed images.
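
Because each stage needs the previous stage's output, the threads have to be ordered. A minimal sketch of one way to do this with semaphores, assuming each stage is a one-argument callable (`run_stages` is a hypothetical name): each thread waits on its own semaphore, applies its filter, and releases the next thread's semaphore. Note that in this sketch the work on any single image is serialized; the threads structure the stages rather than overlap them.

```python
import threading


def run_stages(image, stages):
    """Run each stage in its own thread, strictly in list order."""
    holder = {"image": image}                       # shared between the threads
    gates = [threading.Semaphore(0) for _ in stages]

    def worker(i, stage):
        gates[i].acquire()                          # wait for the previous stage
        holder["image"] = stage(holder["image"])
        if i + 1 < len(gates):
            gates[i + 1].release()                  # hand over to the next stage

    threads = [threading.Thread(target=worker, args=(i, s))
               for i, s in enumerate(stages)]
    for t in threads:
        t.start()
    if gates:
        gates[0].release()                          # let the first stage begin
    for t in threads:
        t.join()                                    # every stage has finished
    return holder["image"]


print(run_stages(10, [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]))
```

With the three Pillow stages as the callables, this applies brightness, then contrast, then sharpness regardless of which thread the scheduler starts first.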

Mapping

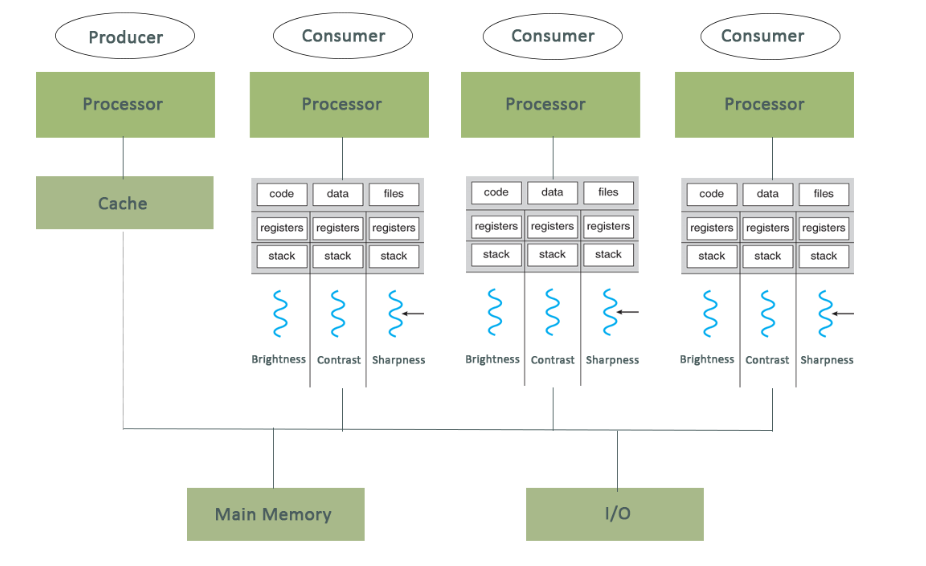

Multiprocessing uses multiple processors to execute processes concurrently. The processes run in parallel with no predetermined order, so there is no guarantee of when each one runs or in what order they finish. While one processor executes instructions, another can do the same, so multiple tasks can complete much faster than they would on a single processor working through them one at a time. Since the Image Enhancer has a static number of tasks, we chose the mapping heuristic for structured communication.

Under this heuristic, one task is assigned to each available processor, as seen in figure 5. Because tasks were already agglomerated in the earlier design stages, each processor handles either a producer or a consumer process, but not both. Depending on the number of available processors, the number of consumer processes can be increased so that each one works on a subsection of the dataset. The diagram illustrates how the processes would be mapped on a quad-core machine.
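
A sketch of that mapping, assuming one processor is reserved for the producer and each remaining processor runs a consumer over a contiguous slice of the image list (`plan_mapping` is a hypothetical helper). Note that `os.cpu_count()` reports logical CPUs, which can exceed the number of physical cores.

```python
import os


def plan_mapping(images, cpu_count=None):
    """Split `images` into one chunk per consumer process."""
    cpus = cpu_count or os.cpu_count() or 1
    n_consumers = max(1, cpus - 1)          # one processor is reserved for the producer
    size, extra = divmod(len(images), n_consumers)
    chunks, start = [], 0
    for i in range(n_consumers):
        end = start + size + (1 if i < extra else 0)  # spread any remainder evenly
        chunks.append(images[start:end])
        start = end
    return chunks


print([len(c) for c in plan_mapping(list(range(60)), cpu_count=6)])
```

On a six-core machine with 60 images this yields five consumers of 12 images each, the configuration used in the results below.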

Results

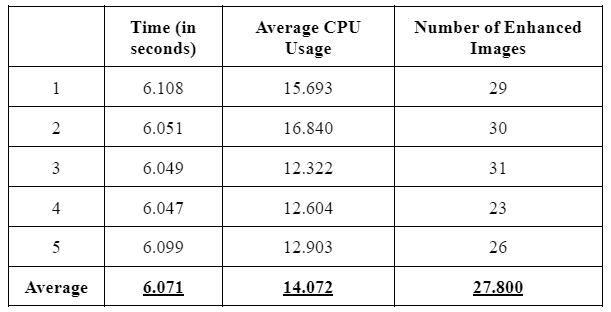

To test the speed and efficiency of our design, we prepared a set of 60 images in .jpg and .png format. We used the same input for every subsequent run to maintain consistency and minimize factors that could affect the results. Note that the machine used to run the program has a six-core processor.

For a baseline, we first tested the same image enhancement program with no parallelism involved. We ran it five times and computed the mean execution time in seconds and the average CPU usage. The sequential implementation successfully enhanced an average of 27.8 images in 6.07 seconds, with an average CPU usage of 14.07%.
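
A minimal sketch of the repeated-run timing, assuming the program can be wrapped in a zero-argument callable (`benchmark` is a hypothetical helper). It only covers wall-clock time; CPU usage would need an external tool, for example `psutil.cpu_percent`, sampled while the runs execute.

```python
import statistics
import time


def benchmark(run, repeats=5):
    """Call `run()` `repeats` times; return (mean, stdev) of wall-clock seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()         # monotonic, high-resolution timer
        run()
        timings.append(time.perf_counter() - start)
    spread = statistics.stdev(timings) if len(timings) > 1 else 0.0
    return statistics.mean(timings), spread
```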

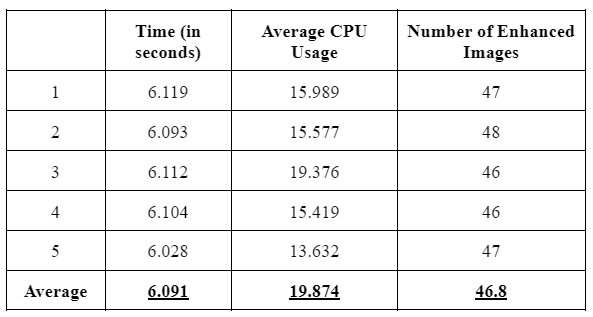

We then ran the parallel Image Enhancer using at most two processors: one process for the producer and one for the consumer. This implementation ran without errors and enhanced an average of 46.8 images in an average of 6.091 seconds, with an average CPU usage of 19.874%.

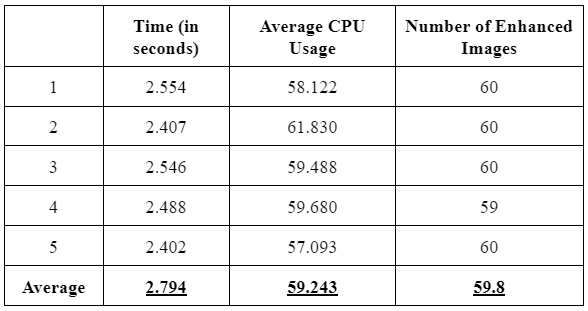

Lastly, we ran the parallel Image Enhancer using all of the machine's available processors: one process for the producer and five processes for the consumer. Since there are 60 input images, each consumer process manipulates only 12 images, and because the consumers run in parallel, this configuration should theoretically be much faster. This implementation ran without errors and enhanced an average of 59.8 images in an average of 2.794 seconds, with an average CPU usage of 59.243%.

Comparing speed-up

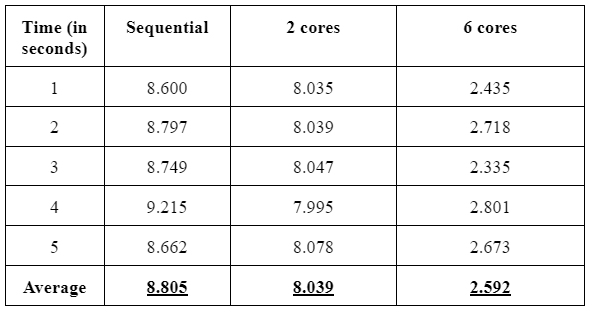

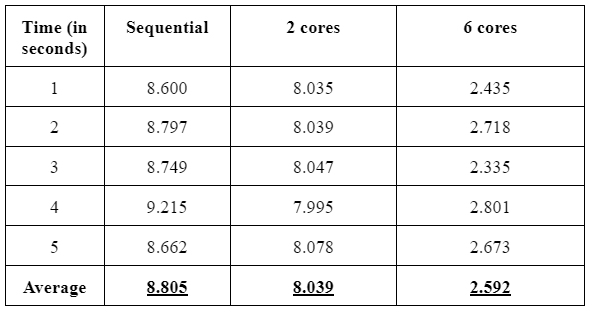

To compare the speed-up of the three implementations, each execution must process the same data to minimize bias and inconsistencies. Thus, for this experiment, we temporarily removed the enhancement-time input.
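
The enhancement-time input can be thought of as a deadline checked between images. A minimal sketch, assuming the limit is checked before each image (`enhance_for` and `handle` are hypothetical names). Passing `minutes=None` corresponds to removing the limit, so every run processes the full set.

```python
import time


def enhance_for(images, handle, minutes=None):
    """Handle images until the list is exhausted or the time budget runs out.

    Returns the number of images handled. minutes=None disables the limit.
    """
    deadline = None if minutes is None else time.monotonic() + minutes * 60
    count = 0
    for image in images:
        if deadline is not None and time.monotonic() >= deadline:
            break                           # time budget used up
        handle(image)
        count += 1
    return count
```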

First, we computed the ratio of the sequential implementation's execution time to the two-core parallel implementation's execution time, which gives the speed-up of the first parallel implementation: 1.095x.

Next, we computed the same ratio for the six-core parallel implementation, which gives the speed-up of the second parallel implementation: 3.396x.
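
Written out, with $T_1$ the sequential execution time and $T_p$ the execution time on $p$ cores (our notation), the speed-up and what the two measured values imply for run time are:

$$
S_p = \frac{T_1}{T_p}, \qquad
S_2 = 1.095 \;\Rightarrow\; T_2 = \frac{T_1}{1.095} \approx 0.913\,T_1, \qquad
S_6 = 3.396 \;\Rightarrow\; T_6 = \frac{T_1}{3.396} \approx 0.294\,T_1
$$

So the two-core run took about 91% of the sequential time, and the six-core run about 29%.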

Comparing efficiency

While speed-up measures how much faster a parallel implementation is, efficiency measures how effectively the program utilizes the processors. To calculate it, we divide the speed-up by the number of cores used. As in the previous experiment, we temporarily removed the enhancement-time input so that each implementation processes the same set of data.

The efficiency of the first implementation was 54.7% (1.095 / 2). In other words, on average each of the two cores spent just over half of the execution time doing useful work for the program.

Applying the same formula, the second implementation, which uses all the available cores, has an efficiency of 56.6% (3.396 / 6). As with the first implementation, the cores spend more than half of the execution time doing useful work.
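
The two formulas are small enough to check directly. A minimal sketch (function names are ours) that reproduces both efficiency figures from the reported speed-ups:

```python
def speedup(t_sequential, t_parallel):
    """Ratio of sequential to parallel wall-clock time."""
    return t_sequential / t_parallel


def efficiency(speedup_value, cores):
    """Speed-up divided by the number of cores used (1.0 means perfect scaling)."""
    return speedup_value / cores


print(f"{efficiency(1.095, 2):.1%}, {efficiency(3.396, 6):.1%}")
```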

Analysis

To get a better grasp of the improvements, we implemented the Image Enhancer with no parallelism techniques as our fixed point for comparison. This sequential implementation manipulated an average of 27.8 images in 6.07 seconds, with an average CPU usage of 14.07%.

Using parallelism, the second implementation of the Image Enhancer used two cores: one for the producer process and one for the consumer process. It manipulated an average of 46.8 images in an average of 6.091 seconds, with a slightly higher average CPU usage of 19.874%.

Compared with the baseline, this implementation is faster, by a factor of 1.095x. The time difference is small (only around half to three-quarters of a second) and might matter more with larger data sets. The efficiency of this implementation is 54.7%.

The last implementation used all of the machine's available processors, differing from the previous one by spawning five consumers instead of one. This Image Enhancer manipulated an average of 59.8 images and was considerably faster than both the baseline and the two-core implementation, with an average time of 2.794 seconds and an average CPU usage of 59.243%. It is 3.396x faster than the baseline, and its efficiency is 56.6%.

Looking back, multiprocessing clearly improves the speed of the Image Enhancer. Without multiprocessing, the sequential program, which uses a single processor for all instructions, processed fewer images than the parallel implementations. Using two cores, the program processed roughly 19 more images than the sequential version (46.8 versus 27.8). Although the speed difference is modest, it is still notable and would likely be more noticeable with larger image sets. Scaling up to six processors, all those available on the machine, sped the Image Enhancer up even further: it manipulated an average of 59.8 images in a mere 2.794 seconds, roughly three times faster than the single-processor baseline. This suggests that parallelism lets the program handle more work while also running faster.

Parallelism also improves efficiency by letting the available processors work on separate chunks of data and instructions. From the experiment, the six-core implementation had an efficiency of 56.6%, meaning that the cores spent more than half of the time doing useful work rather than sitting idle.

The efficiencies of the two-core and six-core implementations are roughly the same, differing by only about 2%. This is representative of the scalability property in parallel programming: as the problem size and the number of processors increase, a scalable program should maintain or improve its efficiency. The six-core implementation maintained, and slightly improved, the efficiency of the two-core one, suggesting that the parallel programming techniques used here exhibit this scalable property.

Summary

In conclusion, the Image Enhancer was developed using parallelism techniques to improve the performance, efficiency, and speed of the algorithm. It uses multiprocessing, spawning multiple processes at the same time to divide the workload of enhancing the input images. It follows a producer-consumer pipeline: the producer process retrieves images from the input file path, and the consumer processes manipulate the images and produce the output. Since processes do not share memory by default, a manager object was created to share data and allow communication between the processes. A semaphore was also used to ensure that only one process enters its critical section at a time.
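
A minimal sketch of that combination, assuming a `Manager().list()` as the shared buffer and a `Semaphore(1)` guarding every access to it (a `Lock` would behave the same here). The function names, the string stand-ins for images, and the `Event` used to signal that the producer has finished are our assumptions, not the project's code.

```python
import multiprocessing as mp
import time


def producer(items, buffer, sem, done):
    for item in items:
        with sem:                        # critical section: append to the shared list
            buffer.append(item)
    done.set()                           # every item has been appended


def consumer(buffer, sem, done, results):
    while True:
        with sem:                        # critical section: take one item, if any
            item = buffer.pop(0) if len(buffer) else None
            finished = item is None and done.is_set()
        if finished:
            return
        if item is None:
            time.sleep(0.001)            # buffer is empty for now; let the producer catch up
            continue
        results.append(item.upper())     # stand-in for enhancing and saving an image


def run(items, n_consumers=2):
    with mp.Manager() as manager:
        buffer, results = manager.list(), manager.list()
        sem, done = mp.Semaphore(1), mp.Event()
        procs = [mp.Process(target=producer, args=(items, buffer, sem, done))]
        procs += [mp.Process(target=consumer, args=(buffer, sem, done, results))
                  for _ in range(n_consumers)]
        for p in procs:
            p.start()
        for p in procs:
            p.join()
        return sorted(results[:])        # copy out before the manager shuts down


if __name__ == "__main__":
    print(run(["a.jpg", "b.png", "c.jpg"], n_consumers=2))
```

A consumer only exits when it sees an empty buffer after the producer has signalled completion, and because appends also require the semaphore, no item can arrive between those two checks.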

Within each consumer process, the workload was further divided using multithreading: three threads are spawned, each in charge of a specific filter (brightness, contrast, or sharpness). A semaphore was also used to synchronize these threads and ensure that all filters are applied properly.

All in all, parallel programming techniques let programs run faster and use the machine more efficiently. In both the two-core and six-core implementations, multiprocessing increased the speed and efficiency of the Image Enhancer, using much more of the CPU than the sequential version would. As we increased the number of physical cores used, the program processed more images at a remarkably faster pace. In essence, parallelism allows a program to make much fuller use of the CPU.
